Wonder

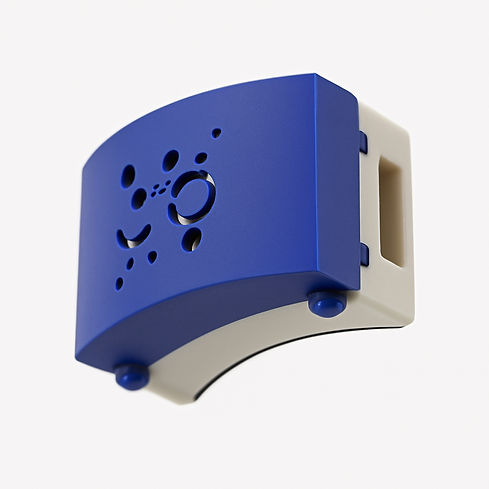

Wonder is a wearable AI device that uses camera vision to help visually impaired people navigate their environment.

It processes camera data to build a map of its surroundings and uses text-to-speech to call out objects that matter to the user. The user can respond or converse with Wonder using voice commands.

Wonder can read and translate text, navigate indoors and outdoors, and more.

The Eyes

We used the OAK-D Lite camera, developed by Luxonis. Its stereo depth sensing gives us the distance to each detected object.
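As a rough illustration of how stereo depth sensing yields a distance, the sketch below applies the standard pinhole stereo relation: depth = focal length × baseline / disparity. This is not Wonder's actual code, since the camera computes depth on-device. The function name `depth_from_disparity` and the example numbers (focal length in pixels, a 7.5 cm baseline) are assumptions chosen only for illustration.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Estimate distance (meters) to a point seen by both stereo cameras.

    disparity_px    : horizontal pixel shift of the point between left and right images
    focal_length_px : camera focal length in pixels (assumed value, from calibration)
    baseline_m      : distance between the two cameras in meters (assumed value)
    """
    if disparity_px <= 0:
        # Zero or negative disparity means no valid match (or a point at infinity).
        return None
    return focal_length_px * baseline_m / disparity_px


# Hypothetical numbers: a larger disparity means the object is closer.
near = depth_from_disparity(disparity_px=60, focal_length_px=450, baseline_m=0.075)
far = depth_from_disparity(disparity_px=15, focal_length_px=450, baseline_m=0.075)
print(near, far)
```

Because depth is inversely proportional to disparity, distant objects produce small disparities and their depth estimates are noisier than those for nearby objects.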

The Brain

To run the entire system, we used the NVIDIA Jetson Orin Nano. On it, we ran SLAM (Simultaneous Localization and Mapping) to build a real-time map of the environment around the user, and delivered spoken instructions through earbuds.
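To make the last step concrete, here is a minimal sketch of how a detected object's distance and direction might be turned into a short phrase for a text-to-speech engine. The function `describe_object`, its parameters, and the 15-degree "ahead" threshold are hypothetical and not taken from Wonder's implementation.

```python
def describe_object(label, distance_m, bearing_deg):
    """Build a short phrase suitable for text-to-speech output.

    label       : name of the detected object, e.g. "door"
    distance_m  : distance to the object in meters
    bearing_deg : angle relative to straight ahead
                  (negative = left, positive = right); assumed convention
    """
    if abs(bearing_deg) <= 15:  # hypothetical threshold for "straight ahead"
        direction = "ahead"
    elif bearing_deg < 0:
        direction = "to your left"
    else:
        direction = "to your right"
    return f"{label}, {distance_m:.1f} meters {direction}"


print(describe_object("door", 2.0, 5))
print(describe_object("chair", 1.5, -40))
```

Keeping the phrase short matters for an audio interface: the user hears the object first, then how far away it is, then where it is.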